The Future of Artificial Intelligence: Stories

You may have heard of Sophia – the humanoid robot who uses AI and facial recognition to imitate human gestures and facial expressions. She can answer some basic questions and even maintain a simple conversation. What Sophia lacks is the ability to understand social or cultural context. She can talk about the weather, but not about society. One of our researchers is hoping to change that.

Artificial intelligence, designed to get machines to do intelligent things, is not new – it’s been around for nearly 70 years. Siri, Alexa and that robot vacuum that sweeps your floors are all examples of AI. Like humans, these machines are evolving, and the next step for them is to be able to understand stories.

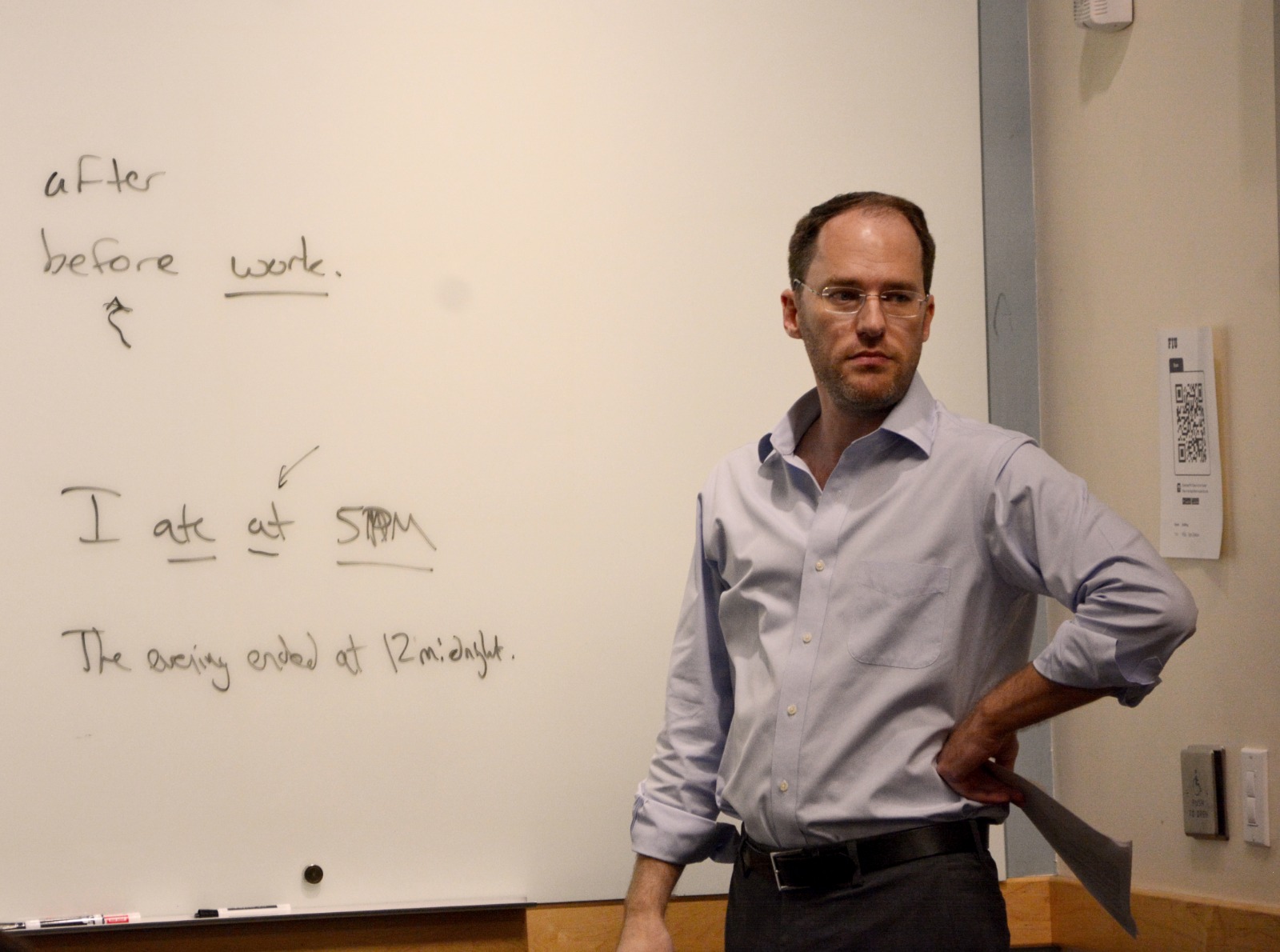

Mark A. Finlayson, an assistant professor in the College of Engineering & Computing’s School of Computing & Information Sciences, is developing technologies that will allow AI systems to understand narratives so they can become contextually aware and reason intelligently about the world. Computers in the near future will be able to take on tasks such as educating doctors and assessing the artistic quality of a novel.

A robot vacuum, for example, can be programmed to explore a room and clean the floor, but it doesn’t know how to detect when it gets stuck. And what if you were to place it on the lawn? Or upside down? It would still happily vacuum away, because it has no understanding of context. To work, it has to stay within its carefully limited sphere of design. For the technology to get better, machines will have to become more flexible in understanding context and in recognizing when they are in a novel situation they were not designed for. They will need to understand more about the world to know whether to pass off control to another system or a person, or to try to “learn” their way out of it.

“A machine doesn’t know anything about how Americans view themselves differently from Russians — for example, that Americans have an American Dream that often guides how we think and react to the world,” said Finlayson. “We have all sorts of subtle assumptions about how the world works, and it dramatically affects how we behave.”

Teaching machines these things is no easy feat. Finlayson, who is a National Science Foundation CAREER Award winner, looks at language and stories as carriers of cultural and social information. Stories express everything from cultural values and beliefs to how people react in certain situations, many of which vary from culture to culture.

Currently, AI systems can understand and respond to simple language: AI can grammatically analyze sentences, identifying nouns and verbs, and figure out who or what is the subject or object of the sentence. But high-level cultural messages are lost. For the next two years, Finlayson has a $450,000 grant from the Defense Advanced Research Projects Agency (DARPA) to research how to extract what are called motifs as part of his work in the Cognition, Narrative, and Culture Laboratory (Cognac). Motifs are small bundles of important cultural information that are often identified by key phrases: for example, the idea of a troll under a bridge or a golden fleece. After teaching AI to identify these motifs, he plans to track them as people employ them (such as in social media) and model how they are used in context, allowing machines to better mimic human cognition.
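To make the idea of spotting motifs by key phrase concrete, here is a minimal sketch in Python. It is not Finlayson’s method, which the article does not describe; it only shows the simplest possible version, literal phrase matching. The two motifs come from the examples above, while the lexicon name `MOTIF_PHRASES`, the phrase variants and the function `find_motifs` are assumptions made up for illustration.

```python
import re
from collections import Counter

# Hypothetical motif lexicon. The two motifs are the article's examples;
# the key phrases listed for each one are illustrative assumptions.
MOTIF_PHRASES = {
    "troll_under_bridge": ["troll under a bridge", "troll under the bridge"],
    "golden_fleece": ["golden fleece"],
}


def find_motifs(text):
    """Count case-insensitive, whole-phrase matches for each motif."""
    counts = Counter()
    for motif, phrases in MOTIF_PHRASES.items():
        for phrase in phrases:
            # \b keeps the match on word boundaries; re.escape treats the
            # phrase as literal text rather than as a regex pattern.
            pattern = r"\b" + re.escape(phrase) + r"\b"
            counts[motif] += len(re.findall(pattern, text, flags=re.IGNORECASE))
    # Report only the motifs that actually appear in the text.
    return {motif: n for motif, n in counts.items() if n > 0}


post = "He guards that budget like a troll under the bridge, hunting his Golden Fleece."
print(find_motifs(post))
```

Literal matching like this finds the phrase but not its meaning: it cannot tell whether a post uses “golden fleece” as a cultural reference or is selling wool, which is the kind of context the research aims to model.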

Finlayson’s research has various real-world applications, for example in fields of education that rely on story-driven learning, such as law, business and medicine. Lawyers often learn about the law through cases, and doctors often learn about medicine through medical case studies.

The work also has applications in getting machines to think ethically. “Ethics is often not about a set of hard rules,” said Finlayson, “but rather about reasoning from stories. People think: should I help this person? What would a Good Samaritan do in this situation? That’s reasoning by reference to a story.”

The ethical dilemma is most dramatic when applied to warfare. Weapons have traditionally needed someone to operate them; until recently they were not independent systems that could analyze a situation and make a decision to pull the trigger. However, as weapons such as drones become more autonomous, there is a desperate need for them to reason ethically and understand when they need to ask a human for help. If Finlayson’s research is successful, these devices may gain situational awareness and the ability to help decision makers make the best possible choice given the context.

“Machines are not moral agents now, and so a person always needs to make the ultimate decision,” said Finlayson. “For machines to be truly independent moral agents, we would need true artificial consciousness, which many AI researchers believe won’t be achieved for at least 50 to 100 years.”

Written By Millie Acebal

Co-written with Mark A. Finlayson

Source: The future of artificial intelligence: stories | FIU News – Florida International University